A survey on cutting-plane methods for feasibility problem

Abstract

Linear programming and feasibility problem are basic problems in convex optimization. So far, many algorithms have been developed to solve them. In this study-type project, we write a survey about a typical class of algorithms called cutting-plane method. The difference between cutting-plane methods lies in the way to select cutting point. We construct this report from the original cutting-plane method to the recent state-of-the-art method with an analysis on the running time and some proofs.

Introduction

In this blog, some cutting-plane methods are introduced. Generally, they are an important class of algorithm for analyzing feasible problem and linear problem. According to the way how the point is selected to feed oracle, there are cutting-plane methods using the center of gravity, the analytic center, the ellipsoid center, and the volumetric center, resulting in the difference on the number of separation oracle calls and the computing complexity for querying point. Among these methods, the optimal number for calling separation oracle is (the center of gravity), and the optimal method for computing querying point is (Lee, Sidford, Wong). In total running time, the best result so far is .

Feasibility Problem

Definition(feasibility problem): A feasibility problem is defined aswhere in each constraint, is convex function.

The goal is to find a feasible solution that can satisfy all the constraints. For linear programming, we have linear constraints, note as or .

From Feasibility Problem to Optimization Problem

Definition(convex optimization problem): A convex optimization problem is

As for convex optimization problem, the set given by constraints should be a convex set. Let this set named , and is the point in that minimizes , we assume that is contained in a ball with a small radius. The output of algorithms should be a point such that , and .

We can use the algorithm for feasibility problem in optimization problem with some modifications. From the algorithm, we obtain a feasible point . Instead of returning the solution, we compute a vector such that and replace the vector returned by the oracle with it. Once is available, the remaining parts are the same as those in the feasibility problem. During iterations, we keep a track of and record the best point . When the algorithm halts, we return as .

Cutting Plane Method

Cutting-plane method is a class of methods for solving linear programming or feasibility problem. The basic idea is using a hyperplane to separate the current point from the optimal solution. According to this note(boyd2011localization), cutting-plane methods have these advantages:

- Cutting-plane methods supports black-box property, in other words, these methods do not need the differentiability of the objective and constraint functions. In each iteration, we can use subgradient as the approximation of gradient.

- Cutting-plane methods which can exploit certain structure can be faster than a general purpose interior-point method.

- Cutting-plane methods do not need to perform evaluation of the objective and constraint functions in each iteration. Which contrasts to interior-point method that requires evaluating both objective and constraint functions.

- Cutting-plane methods is well suitable for scalability. They can be used in complex problems with a very large number of constraints by decomposing problems into smaller ones that can be solved in parallel.

Separation Oracle

The basic assumption for cutting-plane method is the existence of Separation Oracle for given sets or functions.

Definition(Hyperplane): A Hyperplane is the set of points satisfying the linear equation , where

Lemma: if is a convex set and is a given point, then one of the two condition holds

- .

- there is a hyperplane that separates from .

Definition(Separation Oracle for a set): Given a set and , a -separation oracle for is a function such that for an input , it either outputs “successful” or a halfspace with and .

Definition(Separation Oracle for a function): For a convex function , let and , a -separation oracle for is a function such that for an input , it either tell ifor outputs a halfspace such that

, with and .

Generally, we set , and note as the running time complexity of this oracle.

Cutting-Planes

To conclude, the feasibility problem can be described as to find a point in a convex set or assert that there is no feasible point in this convex set, i.e. the convex set is empty. The optimization problem is to find an optimal point in the convex set.

As an advantage of cutting-plane method, we do not need to get access to the objective and constraint functions except querying through an separation oracle. When cutting-plane calls a separation oracle at a point , the oracle should return either , or a non-empty separating hyperplane such that

We call the hyperplane as cutting-plane, as it determines the halfspace ,

for next iteration. Especially, by rescaling(dividing and by ), we can assume , this gives us the same cutting-plane.

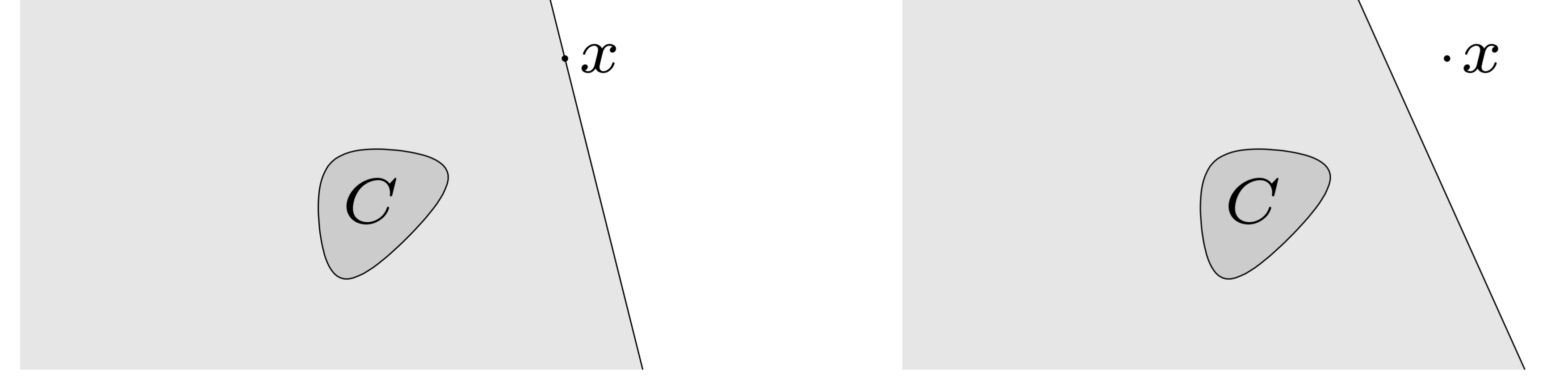

To be exact, if the cutting-plane contains the point , we note it as a neural cut. If the cutting-plane satisfies , we note it as a deep cut. As shown in Figure 1, a deep cut gives us a better reduction in search space.

Firgure 1 Left: a neutral cut. Right: a deep cut

When solving the feasibility problem, we proceed as follows: for an initial point , if satisfies , we return as a feasible solution. Otherwise, there is at least one constraint that violates. Let be the index of one violated constraint where ; be the subgradient value at , from the property of subgradient .

we know that once , then , and so is not a solution. Therefore, for any feasible , it should satisfy .

In other words, we can remove search space given by the halfspace . For multiple violated constraints, we can reduce search space by multiple hyperplanes.

To summarize the process of cutting-plane methods, the algorithm goes as

- given an initial polyhedron that contains ,

- Choose a point

- Query the oracle at , if the oracle tells , return

- Else, update by cutting-plane:

- Repeat until we find a feasible or is empty

Especially, as for a feasible problem, binary search can be regarded as a special cutting-plane method.

From these descriptions, we can know a good cutting-plane method should cut search space in each iteration as big as possible. In other words, the method should minimize the ratio . According to the strategy of choosing next query point, we have different cutting-plane methods.

Center of Gravity Method

A simple strategy for choosing query point is using the center of gravity. This method was one of the first cutting-plane method proposed by Levin(levin1965algorithm). In this method, we select the center of gravity as the query point , where the center of gravity is

Theorem(Grunbaum's inequality): Given a convex body , , let be any halfspace that goes through and divide into and , then .

Proof: First, let be a n-dimensional circular cone, be the left side of the cone ( is the axis of the cone aligned vertex which is at the origin). According to the property of centroid of cone,Since , then

As for any convex body, Without loss of generality, by affine transformation, the centroid can be changed to origin and the hyperplane used to cut it is . Then replace every -dimensional slice with an -dimensional ball with the same volume to get a convex body , using Brunn-Minkowski inequality, we can verify that is convex. This will not change the ratio of volume that contained in the halfspace. Therefore, we can construct a new cone according to : let , , we replace with a cone with the same volume. Similarly, we replace with an extension of the cone with the same volume. After transformation, the centroid of is changed from origin to be a little right along , note the new centroid as .

From previous result, we know that , therefore, .

Lemma: By applying Grunbaum’s inequality, the orable complexity of is , which means the number of iterations is linear.

Proof: According to Theorem(Grunbaum), we have, for a given error tolerance , assume

Therefore,

Although the center of gravity method only needs to call oracle within linear times, which achieves the optimal oracle complexity, we need to keep exactly the intersection of all separating hyperplanes and keep record of the feasible region. In addition, this method has a vital disadvantage: the center of gravity is extremely difficult to calculate, especially for a polyhedron in high dimensions. Computing the center of gravity may be more difficult than the optimization problem, which means it is not a practical method.

Analytic Center Cutting-Plane Method

As a variant method of the center of gravity method, the analytic center cutting-plane method(ACCPM)(boyd2008analytic) uses the analytic center of polyhedron as query point. In order to find the analytic center, we need to solve

where is the analytic center of a set of inequalities . The problem is that we are not given a start point in the domain. One method is to use a phase I optimization method described in (boyd2004convex)(Chapter 11.4) to find a point in the domain and perform standard Newton method to compute the analytic center. Another method is to use an infeasible start Newton method described in (boyd2004convex)(Chapter 10.3), to solve the problem. In addition, we can use standard Newton method to the dual problem.

The dual analytic centering problem is

The optimality conditions( are optimal for primal and dual) are

Dual feasibility needs

The Newton equation is

We can solve and let .

In order to judge when to finish Newton method, we need Newton decrement

When , we know is the analytic center, where

When , we know is primal feasible, where

The proof for convergence and the analysis of time complexity are a little complex, here we omit the process and take the proof result from Y. Ye(ye2011interior). This algorithm will terminate after of iterations. The number to call oracle is still linear.

This method was further studied and developed by Vaidya(atkinson1995cutting), which is introduced later.

Ellipsoid Method

Ellipsoid method was developed by Shor, Nemirovsky, Yudin in 1970s and used by Khachian to show the polynomial solvability of Linear Programming problems. Ellipsoid method generates a sequence of ellipsoids with a decreasing volume in that are guaranteed to contain sets of the optimal points. Assume there is an ellipsoid that is guaranteed to contain a minimizer of . In ellipsoid method, we compute the subgradient of at the center of ellipsoid, from the result of querying oracle, we then know the half ellipsoid

contains the solution. We construct a new ellipsoid with minimum volume that contains the sliced half ellipsoid. We repeat these step until the ellipsoid is small enough to contain a ball with radius .

Initially, we set as a large enough number such that , where .

To be exact, we can write the updating of ellipsoid method as a closed form. An ellipsoid can be written as , where which describes the size and shape of ellipsoid, the center of ellipsoid is . The volume of is given by , where is the volume of the unit ball in .

The half ellipsoid is

The new ellipsoid with minimum volume that contains the half ellipsoid is

where

According to these formulas, we can calculate the center and shape of ellipsoid in each iteration.

From the notes in this course, we know these theorems.

Theorem:

Lemma : The volume of ellipsoid decreases by a constant factor after iterations. The iteration number is

From the calculation process, ellipsoid method takes calls of oracle, which is far from the lower bound . We find that the separating hyperplane is only used for producing the next ellipsoid. In fact, ellipsoid method is theoretically slower than interior point method and practically very slow since it always does the same computation. However, ellipsoid method is still a quite important theory, as the black-box property enables us to solve exponential size linear programming problem.

The Volumetric Center Method by Vaidya

By maintaining an approximation of the polytope and using a different center, which is called volumetric center, Vaidya(atkinson1995cutting) obtained a faster cutting-plane method.

The idea comes from interior point method that to compute an approximation of the volumetric center. The approximation is obtained by using the previous center as the initial solution to compute the center of the current center by Newton method.

Let be the convex set which is approximated by a finite set of inequalities, so ( is an matrix and is an -vector).Let be a strictly feasible point in and . The logarithmic barrier for the polytope is

Therefore, based on the logarithmic barrier, we can obtain the volumetric barrier function for at point :

, where

By setting the in this form, in fact, is the inverse volume of the Dikin ellipsoid at point . Volumetric barrier can be regarded as a weighted log-barrier. By introducing the leverage scores of constraints

we can verify

In fact, Vaidya’s method produces a sequence of pairs such that the corresponding polytope contains the convex set of interest. The initial polytope is a simplex or a hypercude that is guaranteed to contain the convex set. In each iteration, we compute the minimizer of the volumetric barrier of the polytope given by and the leverage score at the point . The next polytope is generated by either adding or removing a constraint from current polytope.

Therefore, with these notations, Vaidya’s method can be described as:

- set a small value

- in each iteration, compute the volumetric center by taking as the initial point for Newton’s method

- if , simply remove the -th constraint

- otherwise let c be the vector given by the separation oracle, by choosing that satisfies we add the constraint given by to the polytope.

- return the best center during iterations.

The idea is straightforward: by introducing the leverage score which reflects the importance of each constraint, we can drop an unimportant constraint during iteration and the volume decreases with a value proportional to the leverage score; Otherwise this constraint is important, then we add it to the polytope.

Lemma: In Step.3, by removing the -th constraint, the volume of the Dikin ellipsoid does not change dramatically as

Lemma: In Step.4, by adding the -th constraint, the volume of the Dikin ellipsoid does not change dramatically as

Lemma: Vaidya’s method stops after steps. The computational complexity is

Vaidya’s method uses Newton’s method to compute the volumetric center, which could be very fast. The computational bottleneck comes from computing the gradient of , where we need to compute the leverage score . So far, the best algorithm for computing leverages scores can improve the computational complexity from to .

A Faster Cutting Plane Method by Lee, Sidford and Wong

This recent method by Lee, Sidford and Wong(lee2015faster) is built on top of Vaidya’s method focusing on the improvement on leverage scores. Although, instead of computing the exact leverage score, we can make an approximation with error and achieve time complexity, Vaidya’s method does not tolerate the multiplicative error. As a result, the approximated center is not close enough to the actual volumetric center, leading to a insufficient query to separation oracle.

Previously in Vaidya’s method, the volumetric center is defined as

where

Now for convenience, we note , then

Based on these fact, the authors came up with a hybrid barrier function as:

where is the weight which is chosen carefully so that the gradient of this function can be computed. For example, let , we reduce the problem as taking the gradient of . The authors also observe that the leverage score does not change greatly between iterations, then they suggests an unbiased estimates to the change of leverage score can be used such that the total error of the estimate is bounded by the total change of the leverage scores. In addition, instead of minimizing the hybrid barrier function, the authors set the weights so that Newton’s method can be applied, as the accurate unbiased estimates of the changes of leverage score is computed, the weights will not change dramatically.

To conclude, this hybrid barrier function takes these assumptions:

- The weights will not change too much between iterations. By carefully choosing the index, no weight will get too large.

-

The changing on weights makes a bounded influence on . To make sure this, the authors add a regularization term. The modified function is

- The hybrid barrier function is convex, this is guaranteed by setting such that .

Therefore, the algorithm can be described as

- Start from a Ball that contains , where stands for the feasible set. Set as threshold.

- In each iteration , we find such that

- .

- is small, say

- .

- Therefore, we store previous , update using dimension reduction.

- Check the error of estimation, if estimation is too bad, we compute it exactly(Set ).

- Similarly as Vaidya’s method, we use these approximated leverage scores to remove constraints or add constraints.

- return a feasible point or conclude that there is no such point.

In particular, the hybrid barrier function becomes

where is the variable we maintain. The reason why there is in the term is that the norm changes the Hessian of origin function, this term is added to reflect the change. Instead of maintaining directly, the authors maintain a vector that approximation the leverage score

Lemma(Time complexity): Let be a non-empty set in a ball with radius . For any , within expected time , this method can output a point or conclude there is no feasible point.

The clever idea in this method is that the authors keep a track on the change of , by setting correctly, they transform computing leverage score into a linear system problem. Therefore, compared to Vaidya’s method, where the leverage score is computed exactly, this method uses bounded approximation of leverage score, the time complexity in computing leverage score is reduced from , where to . Follow the same analysis process in Vaidya’s method, we can conclude that the general time complexity is .

In fact, all the theorems and assumptions are proved in the origin paper, as the proofs are complex and complete, please refer to the paper(lee2015faster) for more details.

A New Polynomial Algorithm by Chubanov

This method is a very recent result, the interesting part is the method does not need the notion of volume to prove the polynomial complexity.

The idea in this paper is based on rescaling(or space dilation) in ellipsoid method, but the final algorithm is a non-ellipsoidal algorithm that solves linear systems in polynomial oracle time.

Theorem(Caratheodory's Theorem): Let , as its convex hull, then

Proof: Carath'eodory’s theorem shows at most d+1 points are needed to describe C.Suppose x in a point in C and , x is the convex combination of at least d+2 points. for , consider they are affinely independent. Then there exists , not all zero, such that .

Since and not all are zero, there are positive and negative . Let , is in positive , one of the coefficients in is 0 and . What’s more, , here point x can be represented as combination of points without point . Then t could be reduced by 1. This process could be repeated as long as there are affinely independent points in the t points.

The maximum number of affinely independent points in is d+1, in other words, t could be only reduced to .

Lemma: for a matrix , any convex combination of the rows of A can be represented in the form where has no more than nonzero components, and satisfiesLet be the maximum entry of , from previous lemma, we know . Let be the related row in . For a vector , let , for any satisfies , we know

Lemma(oracle complexity): Given , within oracle calls and arithmetic computations, we can find a solution of feasible problem or a vector such that

Proof: During the iterations, when we check a nonzero vector, assume the -th is violated(otherwise we are done). Let be the vector that only the -th entry is 1 and 0s in other entries, we check whether . If so, we return as the solution, otherwise, let the new violated constraint as -th entry of A, i.e. . Let , whereFrom geometrical perspective, when the origin is orthogonally projected to , we obtain . If , we find a feasible solution. Otherwise, according to rescaling of , we can bound , we know

, where .

Then we set and repeat these steps. From this inequality, we know that the decreasing rate of , the number of iterations is bounded by . In order to compute at each iterations, we need to call the separation oracle and check every constraint, which results in arithmetic operations in each iteration. Since , the number of nonzero entries in increases at most 1 between iterations. Then a algorithm is used after iterations, where comes from the algorithm that solves . By taking all conditions, we obtain the wanted complexity analysis.

Consider two orthogonal vectors , we know that for any , x is uniquely represented by . Therefore, any function for and be regard as function for . Let be the linear operator as

Lemma: Let , set such that , then

Proof: As can be represented as , from , , , we know . Therefore,Then .

Consider the feasible constraints as . As the beginning of this method, it assumes the norms of the rows in is bounded in , if they are not in the range, simply normalize the coefficient vector.

The algorithm is described as

- Let begin as the identity matrix.

- Let be the matrix obtained from normalizing the rows such that . Given a vector , use a polynomial procedure to find a value such that . Then, . Set , find a nonzero vector such that satisfies or a vector such that for all with .

- if is found, return

- Otherwise, set

- repeat steps until, the number of iterations exceed limit.

From above setting, is feasible for if and only if satisfies this system(Let be violated at , then is violated at ). We use the separation oracle for and compute , where is computed from violated constraints and normalization procedure.

In the iteration, by setting , we make sure . from the result in Lemma, for each column in ,

Theorem(Sylvester's determinant identity): Given , the equation holds

Proof: Construct asas has its inverse ,

as has its inverse ,

Therefore,

Theorem(Hadamard's inequality): Let be column in , then

Proof: It is obvious that if , the inequality holds as norm is non-negative. When , this suggests that all columns in are linear independent. Therefore, we can orthogonalize these columns. Let , be the orthogonal project onto , and , then . Also, we haveLet be the matrix with columns , is obtained from by elementary column operations, then . Since the columns in are orthogonal, we have . Therefore,

According to Sylvester’s determinant identity, , therefore, according to Hadamard’s inequality,

After iterations, from previous analysis, we obtain

Lemma: Let be a feasible matrix with the maximum determinant during iterations, this algorithm can return a solution in calls in separation oracle and arithmetic operations.

Although the total time complexity is quite weak compared to previous cutting-plane method, the Chubanov’s method gives us an interesting perspective of the usage of separation oracle.

Conclusion

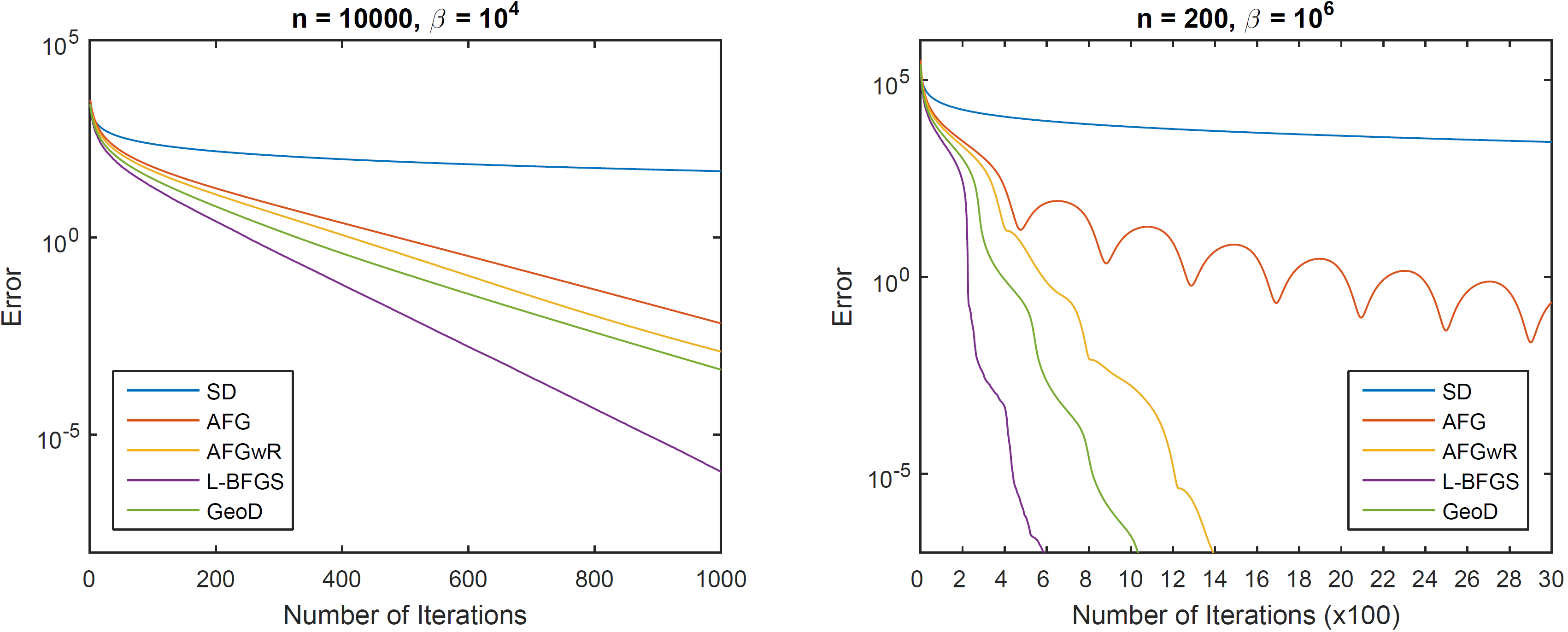

Most people may believe that cutting-plane method is only meaningful for theoretic analysis and unpractical for real applications. It is a common sense that compared to computing gradient or Hessian, maintaining the volume and center of ellipsoid is relatively slow. However, according to a recent paper by Bubeck, Lee, and Singh(bubeck2015geometric), cutting-plane method is suitable for real applications. For short, we name steepest decent as “SD”, accelerated full gradient method as “AFG”, accelerated full gradient method with adaptive restart as “AFGwR”. All of them are first-order optimization algorithms. In addition, we note quasi-Newton with limited-memory BFGS updating as “L-BFGS”, note geometric decent as “GeoD” which is exactly the cutting-plane method.

We know Newton’ method is the optimal bound for optimization problem and gradient decent with its variants are prevailing algorithms. The authors tested the performance of all algorithms on 40 datasets and 5 regularization coefficients with smoothed hinge loss function. The results are as follows:

Comparison of gradient methods and cutting-plane method

From this figure, we find that cutting-plane is surprisingly suitable for optimization problem in real application. As Hessian matrix in Newton’s method is extremely difficult to maintain; gradient descent should set up with a careful step size, cutting-plane methods has the advantages in converging speed and computing complexity. We may see more developments and applications of cutting-plane methods in the future.

Acknowledge

Thank Professor Lap Chi Lau for feedbacks and suggestions. Also, thank Professor Yaoliang Yu for inspiring and providing some helps during this blog.

Fishpond

Fishpond